Paul Graham

Accelerating Python on GPUs

#1 about 2 minutes

The rise of general-purpose GPU computing

NVIDIA evolved from a graphics hardware company into a leader in general-purpose computing, a shift accelerated by the use of GPUs to train AI models such as AlexNet.

#2 about 4 minutes

Why GPUs outperform CPUs for parallel tasks

As single-threaded CPU performance plateaued, GPUs offered a path forward with their massively parallel architecture designed for simultaneous computation.

#3 about 6 minutes

Understanding modern GPU architecture and operation

The CPU offloads compute-intensive code to the GPU, which runs thousands of threads to hide memory latency and relies on streaming multiprocessors and high-bandwidth memory.
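The link between thread count and latency hiding can be sketched with Little's law (work in flight = latency × throughput). The function name and every number below are hypothetical, chosen only for illustration and not measured on any GPU; this is a back-of-the-envelope estimate, not a model of a real device.

```python
import math


def threads_to_hide_latency(latency_cycles, requests_per_cycle, requests_per_thread=1):
    """Estimate how many threads must be resident to keep memory busy.

    Little's law: requests in flight = latency * throughput.
    Each thread is assumed to keep `requests_per_thread` requests outstanding.
    """
    in_flight = latency_cycles * requests_per_cycle
    return math.ceil(in_flight / requests_per_thread)


# Hypothetical figures: a 400-cycle memory latency and a multiprocessor that
# can issue 2 memory requests per cycle.
per_sm = threads_to_hide_latency(latency_cycles=400, requests_per_cycle=2)

# Hypothetical device with 100 streaming multiprocessors.
total = per_sm * 100

print(per_sm, total)  # 800 80000
```

Under these assumed numbers, each multiprocessor needs hundreds of resident threads and the whole device needs tens of thousands, which is why GPU programs are written to expose far more parallelism than a CPU program would.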

#4 about 7 minutes

Introducing the CUDA parallel computing platform

The CUDA platform is a complete ecosystem of compilers, libraries, and frameworks that lets developers program GPUs in a range of languages and at different levels of abstraction.

#5 about 3 minutes

Leveraging specialized hardware like Tensor Cores

Specialized hardware like Tensor Cores can be used transparently through high-level libraries like cuDNN or programmed directly with low-level APIs for maximum performance.

#6 about 6 minutes

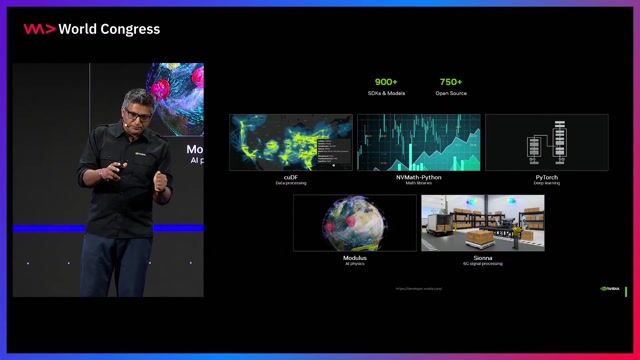

High-level frameworks for domain-specific acceleration

Frameworks like RAPIDS provide GPU-accelerated, drop-in replacements for popular data science libraries, such as cuDF for pandas and cuGraph for NetworkX, with minimal code changes.
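A minimal sketch of what "drop-in" can look like in practice, assuming cuDF is installed and a CUDA GPU is available; otherwise the same calls run on pandas. The alias `xdf` and the sample data are hypothetical, and only the small subset of the DataFrame API used here is claimed to match between the two libraries.

```python
try:
    import cudf as xdf  # GPU DataFrame library from RAPIDS
    xdf.DataFrame({"probe": [0]})  # fails early if no usable GPU is present
except Exception:
    import pandas as xdf  # CPU fallback exposing the same calls used below

# Hypothetical sample data for illustration.
sales = xdf.DataFrame(
    {
        "region": ["north", "south", "north", "south"],
        "amount": [1.0, 2.0, 3.0, 4.0],
    }
)

# Identical groupby/aggregate code on either backend.
totals = sales.groupby("region")["amount"].sum()

# cuDF results live in GPU memory; copy them to the host before converting.
if hasattr(totals, "to_pandas"):
    totals = totals.to_pandas()

totals_dict = totals.sort_index().to_dict()
print(totals_dict)  # {'north': 4.0, 'south': 6.0}
```

Only the import changes between the CPU and GPU versions. Whether the GPU path is faster depends on the data size, since small frames like this one are dominated by transfer and launch overhead.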

#7 about 10 minutes

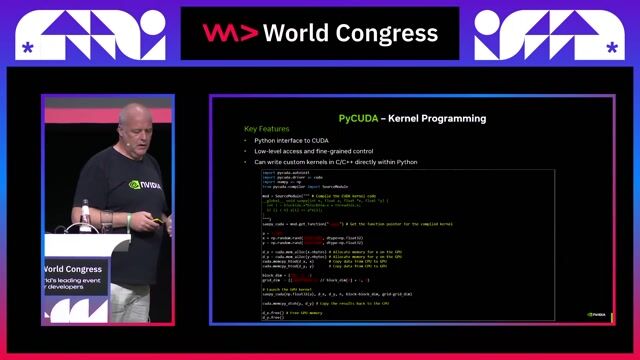

A progressive approach to programming GPUs in Python

Developers can choose from a spectrum of Python libraries, ranging from drop-in replacements like cuNumeric and CuPy, through JIT compilers like Numba, to direct kernel programming with PyCUDA.
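Two points on that spectrum can be sketched side by side: an array-level drop-in with CuPy and a hand-written kernel compiled by Numba. This is an illustrative sketch, not code from the talk. The helper names `rms` and `saxpy` are hypothetical, and both paths fall back to NumPy when no CUDA device or GPU library is available.

```python
import numpy as np

# Step 1: array-level drop-in. CuPy mirrors much of the NumPy API, so the
# same function body can run on either library.
try:
    import cupy as xp
    xp.cuda.runtime.getDeviceCount()  # raises if no CUDA device is usable
except Exception:
    xp = np  # CPU fallback


def rms(values):
    """Root mean square, computed on the GPU when CuPy is usable."""
    arr = xp.asarray(values, dtype=xp.float32)
    return xp.sqrt(xp.mean(arr * arr)).item()  # .item() copies the scalar to the host


# Step 2: an explicit kernel, JIT-compiled by Numba for the GPU.
try:
    from numba import cuda
    HAVE_NUMBA_GPU = cuda.is_available()
except Exception:
    HAVE_NUMBA_GPU = False

if HAVE_NUMBA_GPU:
    @cuda.jit
    def _saxpy_kernel(a, x, y, out):
        i = cuda.grid(1)      # global index of this thread
        if i < out.size:      # guard threads past the end of the array
            out[i] = a * x[i] + y[i]


def saxpy(a, x, y):
    """Compute a * x + y elementwise, on the GPU when Numba can reach one."""
    x = np.asarray(x, dtype=np.float32)
    y = np.asarray(y, dtype=np.float32)
    if not HAVE_NUMBA_GPU:
        return a * x + y  # CPU fallback
    d_x, d_y = cuda.to_device(x), cuda.to_device(y)
    d_out = cuda.device_array_like(x)
    threads = 128
    blocks = (x.size + threads - 1) // threads  # enough blocks to cover x
    _saxpy_kernel[blocks, threads](np.float32(a), d_x, d_y, d_out)
    return d_out.copy_to_host()


print(rms([3.0, 4.0]))
print(saxpy(2.0, [1.0, 2.0, 3.0], [10.0, 20.0, 30.0]))
```

The first function needs no knowledge of threads or memory transfers, while the second makes the launch configuration and host-to-device copies explicit. That trade of convenience for control is what separates the two ends of the spectrum.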

#8 about 6 minutes

Developer tools and learning resources for GPUs

NVIDIA offers a comprehensive suite of developer tools for profiling and debugging, along with learning resources like the NGC repository, DLI courses, and community events.
