Anshul Jindal & Martin Piercy

Your Next AI Needs 10,000 GPUs. Now What?

#1 about 2 minutes

Introduction to large-scale AI infrastructure challenges

An overview of the topics to be covered, from the progress of generative AI to the compute requirements for training and inference.

#2 about 4 minutes

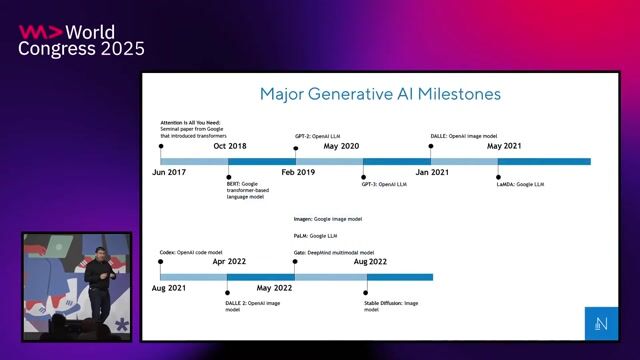

Understanding the fundamental shift to generative AI

Generative AI creates novel content, moving beyond prediction to unlock new use cases in coding, content creation, and customer experience.

#3 about 6 minutes

Using NVIDIA NIMs and blueprints to deploy models

NVIDIA Inference Microservices (NIMs) and blueprints provide pre-packaged, optimized containers to quickly deploy models for tasks like retrieval-augmented generation (RAG).
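As a rough illustration of what calling a deployed model in a RAG flow might look like, the sketch below builds (but does not send) an HTTP request. It assumes the container exposes an OpenAI-style chat completions route at `/v1/chat/completions` on `localhost:8000`; the model name, the helper `build_chat_request`, and the sample passages are placeholders, not details from the talk.

```python
import json
import urllib.request


def build_chat_request(base_url, model, question, context_passages):
    """Build a POST request that asks a model to answer from retrieved passages.

    Assumption: the endpoint accepts an OpenAI-style chat completions payload.
    """
    context = "\n\n".join(context_passages)
    payload = {
        "model": model,
        "messages": [
            {
                "role": "system",
                "content": "Answer using only the provided context.\n\n" + context,
            },
            {"role": "user", "content": question},
        ],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical values for illustration only.
request = build_chat_request(
    base_url="http://localhost:8000",
    model="example/model-name",
    question="Which parallelism techniques does the talk cover?",
    context_passages=["The talk covers tensor, pipeline, and data parallelism."],
)
print(request.full_url)
# To actually send it, a running endpoint is required:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

The retrieval step is left out on purpose: in a RAG setup the passages would come from a vector search over your own documents before the request is built.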

#4 about 4 minutes

An overview of the AI model development lifecycle

Building a production-ready model involves a multi-stage process including data curation, distributed training, alignment, optimized inference, and implementing guardrails.

#5 about 6 minutes

Understanding parallelism techniques for distributed AI training

Training massive models requires splitting them across thousands of GPUs using tensor, pipeline, and data parallelism to manage compute and communication.
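To make the three parallelism dimensions concrete, here is a minimal sketch of how they multiply into a total GPU count and how a flat GPU rank could map onto them. The degrees used (8, 16, 80) and the rank ordering are illustrative assumptions, not figures from the talk; real frameworks choose their own layouts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParallelLayout:
    tensor: int    # GPUs that jointly hold each layer's weight matrices
    pipeline: int  # consecutive groups of layers (pipeline stages)
    data: int      # full model replicas, each fed different batches

    @property
    def gpus_per_replica(self) -> int:
        return self.tensor * self.pipeline

    @property
    def total_gpus(self) -> int:
        return self.gpus_per_replica * self.data


def rank_to_coords(rank: int, layout: ParallelLayout) -> tuple[int, int, int]:
    """Map a flat rank to (data, pipeline, tensor) indices.

    Assumed ordering: the tensor index varies fastest, so tensor-parallel
    peers are adjacent ranks and can sit on the fastest links.
    """
    if not 0 <= rank < layout.total_gpus:
        raise ValueError("rank out of range")
    tensor_idx = rank % layout.tensor
    pipeline_idx = (rank // layout.tensor) % layout.pipeline
    data_idx = rank // layout.gpus_per_replica
    return data_idx, pipeline_idx, tensor_idx


# Hypothetical layout: 8-way tensor, 16-stage pipeline, 80 data-parallel replicas.
layout = ParallelLayout(tensor=8, pipeline=16, data=80)
print(layout.total_gpus)          # 10240
print(rank_to_coords(8, layout))  # (0, 1, 0)
```

Keeping the tensor dimension innermost reflects a common design choice: tensor parallelism communicates most often, so it benefits most from staying inside a single server.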

#6 about 2 minutes

The scale of GPU compute for training and inference

Training large models like Llama requires millions of GPU hours, while inference for a single large model can demand a full multi-GPU server.
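The arithmetic behind these claims can be sketched in a few lines. All numbers below are illustrative assumptions (a 30-million-GPU-hour budget, a 70-billion-parameter model in 16-bit precision, 80 GB of memory per GPU), not figures quoted in the talk, and the memory estimate counts weights only.

```python
import math


def wall_clock_days(gpu_hours: float, num_gpus: int, utilization: float = 1.0) -> float:
    """Convert a GPU-hour budget into elapsed days on a cluster of num_gpus."""
    if num_gpus <= 0 or not 0 < utilization <= 1:
        raise ValueError("num_gpus must be positive and utilization in (0, 1]")
    return gpu_hours / (num_gpus * utilization) / 24


def min_gpus_for_weights(params_billion: float, bytes_per_param: int, gpu_memory_gb: float) -> int:
    """Lower bound on GPUs needed just to hold the weights.

    Ignores KV cache, activations, and framework overhead, so real
    deployments need more headroom than this.
    """
    weights_gb = params_billion * bytes_per_param  # 1e9 params * bytes / 1e9
    return math.ceil(weights_gb / gpu_memory_gb)


# Hypothetical training budget spread over 10,000 GPUs at perfect utilization.
print(wall_clock_days(30_000_000, 10_000))  # 125.0

# Hypothetical 70B-parameter model, 2 bytes per parameter, 80 GB per GPU.
print(min_gpus_for_weights(70, 2, 80))      # 2
```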

#7 about 3 minutes

Key hardware and network design for AI infrastructure

Effective multi-node training depends on high-speed interconnects like NVLink and network architectures designed to minimize communication latency between GPUs.
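One way to see why interconnect speed matters is the standard bandwidth-only cost model for a ring all-reduce, the collective commonly used to sum gradients across data-parallel GPUs: each GPU sends and receives roughly 2(N-1)/N times the buffer size. The sketch below applies that model; the buffer size and the two bandwidth values are hypothetical, and per-message latency is ignored.

```python
def ring_allreduce_seconds(buffer_bytes: float, num_gpus: int, bandwidth_bytes_per_s: float) -> float:
    """Bandwidth-only estimate of ring all-reduce time.

    Each GPU transfers 2 * (N - 1) / N of the buffer: one pass to
    reduce-scatter, one pass to all-gather. Latency terms are omitted.
    """
    if num_gpus < 1 or bandwidth_bytes_per_s <= 0:
        raise ValueError("need at least one GPU and positive bandwidth")
    if num_gpus == 1:
        return 0.0  # nothing to exchange
    traffic_factor = 2 * (num_gpus - 1) / num_gpus
    return traffic_factor * buffer_bytes / bandwidth_bytes_per_s


# Hypothetical: 10 GB of gradients across 8 GPUs on a fast vs. a slow link.
gradients = 10e9
fast_link = ring_allreduce_seconds(gradients, 8, 100e9)  # assumed 100 GB/s
slow_link = ring_allreduce_seconds(gradients, 8, 10e9)   # assumed 10 GB/s
print(f"fast: {fast_link:.3f} s, slow: {slow_link:.3f} s")
```

Because this exchange happens on every training step, a tenfold difference in link bandwidth translates almost directly into a tenfold difference in time spent waiting on communication under this model.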

#8 about 3 minutes

Accessing global GPU capacity with DGX Cloud Lepton

NVIDIA's DGX Cloud Lepton is a marketplace connecting developers to a global network of cloud partners for scalable, on-demand GPU compute.

Matching moments

01:40 MIN

The rise of general-purpose GPU computing

Accelerating Python on GPUs

02:27 MIN

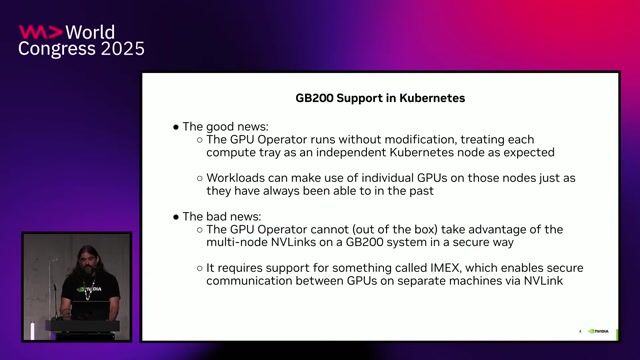

Understanding the NVIDIA GB200 supercomputer architecture

A Deep Dive on How To Leverage the NVIDIA GB200 for Ultra-Fast Training and Inference on Kubernetes

01:46 MIN

Accessing software, models, and training resources

Accelerating Python on GPUs

03:07 MIN

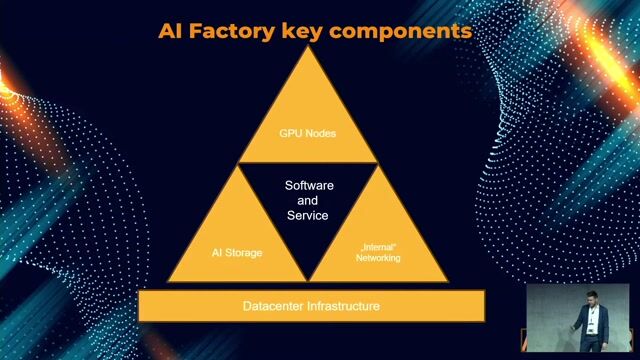

Building an AI factory with all the essential components

AI Factories at Scale

01:51 MIN

Overview of the NVIDIA AI Enterprise software platform

Efficient deployment and inference of GPU-accelerated LLMs

01:24 MIN

The evolution of GPUs from graphics to AI computing

Accelerating Python on GPUs

01:04 MIN

NVIDIA's platform for the end-to-end AI workflow

Trends, Challenges and Best Practices for AI at the Edge

02:27 MIN

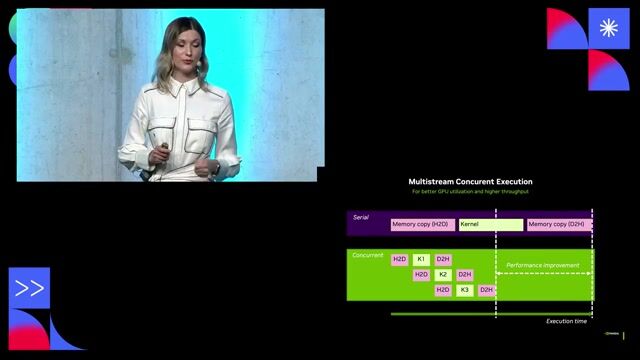

A look inside the NIM container architecture

Efficient deployment and inference of GPU-accelerated LLMs

Related Videos

22:07 WWC24 - Ankit Patel - Unlocking the Future Breakthrough Application Performance and Capabilities with NVIDIA

Ankit Patel

32:19 A Deep Dive on How To Leverage the NVIDIA GB200 for Ultra-Fast Training and Inference on Kubernetes

Kevin Klues

23:01 Efficient deployment and inference of GPU-accelerated LLMs

Adolf Hohl

31:25 Unveiling the Magic: Scaling Large Language Models to Serve Millions

Patrick Koss

24:26 AI Factories at Scale

Thomas Schmidt

32:27 Generative AI power on the web: making web apps smarter with WebGPU and WebNN

Christian Liebel

28:38 Exploring LLMs across clouds

Tomislav Tipurić

18:33 From foundation model to hosted AI solution in minutes

Kevin Klues
