Nico Martin

From ML to LLM: On-device AI in the Browser

#1 (about 2 minutes)

Using machine learning to detect verbal filler words

A personal project to detect and count filler words in Swiss German speech highlights the limitations of standard speech-to-text APIs.
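Because the speech-to-text route fails for Swiss German, detection has to work on the audio itself. The following is a minimal sketch of the counting step only. It assumes a hypothetical classifier that emits one filler-word probability per audio frame, which is not the project's actual model. With that input, a filler is counted once when the probability stays above a threshold for a minimum number of consecutive frames.

```typescript
/**
 * Counts filler-word events in per-frame probabilities.
 * Assumption: a hypothetical audio classifier outputs, for each frame,
 * the probability that the frame contains a filler word.
 */
function countFillerEvents(
  probabilities: readonly number[],
  threshold = 0.8,
  minFrames = 3,
): number {
  let events = 0;
  let run = 0; // consecutive frames at or above the threshold
  for (const p of probabilities) {
    if (p >= threshold) {
      run += 1;
      // Count the event once, at the moment the run reaches minFrames.
      if (run === minFrames) events += 1;
    } else {
      run = 0; // a frame below the threshold ends the current run
    }
  }
  return events;
}

// Two sustained peaks and one single-frame spike (ignored as noise).
const frameScores = [0.1, 0.9, 0.95, 0.9, 0.2, 0.85, 0.1, 0.9, 0.9, 0.9, 0.9];
console.log(countFillerEvents(frameScores)); // 2
```

Requiring several consecutive frames acts as a simple debounce, so a single noisy frame does not inflate the count.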

#2 (about 2 minutes)

Comparing TensorFlow.js backends for performance

TensorFlow.js performance depends on the chosen backend, with WebGPU offering significant speed improvements over CPU, WebAssembly, and WebGL.
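In TensorFlow.js, `tf.setBackend(name)` resolves to `true` when the backend initializes, and the WebGPU backend must be registered by importing `@tensorflow/tfjs-backend-webgpu`. The following is a minimal sketch of falling back from the fastest backend to slower ones. It is written against that `setBackend` signature so it can run without a browser. The preference order is an assumption loosely based on the ranking above, not a recommendation from the talk.

```typescript
/** Same shape as TensorFlow.js `tf.setBackend`, which resolves to true on success. */
type SetBackend = (name: string) => Promise<boolean>;

/**
 * Tries backends in order and returns the first that initializes.
 * Assumption: the default order (WebGPU first, CPU last) is illustrative.
 */
async function pickBackend(
  setBackend: SetBackend,
  preference: readonly string[] = ["webgpu", "webgl", "wasm", "cpu"],
): Promise<string> {
  for (const backend of preference) {
    try {
      if (await setBackend(backend)) return backend;
    } catch {
      // An unregistered backend can throw instead of resolving false; try the next.
    }
  }
  throw new Error("No TensorFlow.js backend could be initialized");
}

// In the browser (sketch):
// import * as tf from "@tensorflow/tfjs";
// import "@tensorflow/tfjs-backend-webgpu";
// const backend = await pickBackend((n) => tf.setBackend(n));
```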

#3 (about 2 minutes)

Real-time face landmark detection with WebGPU

A live demo shows the WebGPU backend in TensorFlow.js running face landmark detection at 30 frames per second, far outpacing the CPU and WebGL backends.
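A frame rate like the one quoted here is usually measured by timestamping each processed frame. The following is a minimal sketch that assumes millisecond timestamps, such as those from `performance.now()` or a `requestAnimationFrame` callback. The window size of 30 frames is an arbitrary choice.

```typescript
/** Estimates frames per second over a sliding window of recent frame timestamps (ms). */
class FpsMeter {
  private readonly times: number[] = [];
  private readonly windowSize: number;

  constructor(windowSize = 30) {
    this.windowSize = windowSize;
  }

  /** Records one processed frame and returns the current FPS estimate. */
  tick(timestampMs: number): number {
    this.times.push(timestampMs);
    if (this.times.length > this.windowSize) this.times.shift();
    if (this.times.length < 2) return 0; // need at least one interval
    const elapsedMs = this.times[this.times.length - 1] - this.times[0];
    // (frames - 1) intervals elapsed over elapsedMs milliseconds
    return elapsedMs > 0 ? ((this.times.length - 1) * 1000) / elapsedMs : 0;
  }
}
```

In a detection loop, you would call `tick(performance.now())` after each inference and display the returned value.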

#4 (about 1 minute)

Building a browser extension for gesture control

A Chrome extension uses a hand landmark detection model to enable website navigation and interaction through pinch gestures.
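Hand landmark models in the MediaPipe Hands family return 21 keypoints per hand. In that layout, index 4 is the thumb tip, index 8 is the index fingertip, index 0 is the wrist and index 9 is the middle-finger knuckle. A pinch can be approximated as the thumb tip and index fingertip coming close together. The following is a minimal sketch that assumes keypoints arrive as an array of `{ x, y }`. Dividing by the wrist-to-knuckle distance and using a 0.25 threshold are illustrative choices, not the extension's actual logic.

```typescript
interface Keypoint { x: number; y: number; }

// Indices in the 21-point MediaPipe Hands layout.
const WRIST = 0;
const THUMB_TIP = 4;
const INDEX_TIP = 8;
const MIDDLE_MCP = 9;

const dist = (a: Keypoint, b: Keypoint): number => Math.hypot(a.x - b.x, a.y - b.y);

/** True when thumb tip and index fingertip are close relative to the hand's size. */
function isPinching(keypoints: readonly Keypoint[], threshold = 0.25): boolean {
  if (keypoints.length < 21) return false;
  // Normalizing by hand size keeps the check stable as the hand moves closer or farther.
  const handSize = dist(keypoints[WRIST], keypoints[MIDDLE_MCP]);
  if (handSize === 0) return false;
  return dist(keypoints[THUMB_TIP], keypoints[INDEX_TIP]) / handSize < threshold;
}
```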

#5 (about 2 minutes)

Training a custom speech model with Teachable Machine

Teachable Machine provides a no-code interface to train a custom speech command model directly in the browser for recognizing specific words.
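An audio model exported from Teachable Machine can be loaded with the `@tensorflow-models/speech-commands` package. Its recognizer invokes a `listen` callback with a `result.scores` array that lines up with the labels from `recognizer.wordLabels()`. The following is a minimal sketch of the decision step you might run inside that callback. The helper name, the 0.75 cutoff, and the idea of an ignore set for a background class are assumptions.

```typescript
/**
 * Returns the most likely word, or null if it is below minScore or ignored.
 * Assumption: scores[i] corresponds to labels[i], as with result.scores
 * and recognizer.wordLabels() in speech-commands.
 */
function topWord(
  scores: ArrayLike<number>,
  labels: readonly string[],
  minScore = 0.75,
  ignore: ReadonlySet<string> = new Set(),
): string | null {
  let best = -1;
  for (let i = 0; i < scores.length; i++) {
    if (best === -1 || scores[i] > scores[best]) best = i;
  }
  if (best === -1 || scores[best] < minScore) return null;
  const label = labels[best];
  return label === undefined || ignore.has(label) ? null : label;
}
```

Accepting `ArrayLike<number>` lets the same helper take the `Float32Array` that a model typically produces or a plain array in tests.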

#6 (about 2 minutes)

The technical challenges of running LLMs in browsers

To run LLMs on-device, we must understand how they work internally, from the tokenizer that converts text into numeric token IDs to the massive model weights.
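Real LLM tokenizers use learned subword vocabularies such as BPE or SentencePiece. The core idea of mapping text to integer IDs can still be shown with a toy tokenizer that greedily matches the longest vocabulary entry. The vocabulary below is invented for illustration and does not correspond to any real model.

```typescript
/** Toy greedy longest-match tokenizer; the vocabulary passed in is hypothetical. */
function tokenize(text: string, vocab: ReadonlyMap<string, number>, unkId: number): number[] {
  const maxLen = Math.max(...Array.from(vocab.keys(), (k) => k.length));
  const ids: number[] = [];
  let i = 0;
  while (i < text.length) {
    let matched = false;
    // Try the longest vocabulary entry that matches at position i first.
    for (let len = Math.min(maxLen, text.length - i); len > 0; len--) {
      const id = vocab.get(text.slice(i, i + len));
      if (id !== undefined) {
        ids.push(id);
        i += len;
        matched = true;
        break;
      }
    }
    if (!matched) {
      ids.push(unkId); // character not covered by the vocabulary
      i += 1;
    }
  }
  return ids;
}

// "device" wins over the shorter "dev" + "ice" because matching is longest-first.
const vocab = new Map([["on", 0], ["-", 1], ["device", 2], ["dev", 3], ["ice", 4], [" ", 5], ["AI", 6]]);
console.log(tokenize("on-device AI", vocab, 99)); // [0, 1, 2, 5, 6]
```

The model only ever sees these integer IDs. The vocabulary is therefore one of the assets that must be downloaded to the browser alongside the weights.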

#7 (about 2 minutes)

Reducing LLM size for browser use with quantization

Quantization is a key technique for reducing the file size of LLM weights by using lower-precision numbers, making them feasible for browser deployment.
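The size arithmetic is direct. A model with N parameters stored at b bits per weight needs about N·b/8 bytes. A 2-billion-parameter model therefore shrinks from roughly 4 GB at 16 bits to roughly 1 GB at 4 bits, ignoring scale factors and other overhead. The following is a minimal sketch of symmetric per-tensor quantization. Production schemes typically quantize small groups of weights, each with its own scale, which this sketch does not do.

```typescript
/** Approximate storage in bytes for `params` weights at `bits` bits each. */
function weightBytes(params: number, bits: number): number {
  return (params * bits) / 8;
}

/**
 * Symmetric per-tensor quantization to signed integers of 2..8 bits.
 * Each weight w becomes round(w / scale), clamped to [-qMax, qMax].
 */
function quantize(weights: readonly number[], bits: number): { q: Int8Array; scale: number } {
  if (bits < 2 || bits > 8) throw new RangeError("bits must be between 2 and 8");
  const qMax = 2 ** (bits - 1) - 1; // 7 for 4-bit, 127 for 8-bit
  const maxAbs = weights.reduce((m, w) => Math.max(m, Math.abs(w)), 0);
  const scale = maxAbs === 0 ? 1 : maxAbs / qMax;
  const q = Int8Array.from(weights, (w) => Math.max(-qMax, Math.min(qMax, Math.round(w / scale))));
  return { q, scale };
}

/** Recovers approximate float weights from the quantized integers. */
function dequantize(q: Int8Array, scale: number): number[] {
  return Array.from(q, (v) => v * scale);
}

console.log(weightBytes(2e9, 16) / 1e9); // 4 (GB at 16-bit)
console.log(weightBytes(2e9, 4) / 1e9); // 1 (GB at 4-bit)
```

The trade-off is precision. Each weight is off by at most half a quantization step (`scale / 2`), and coarser steps mean larger errors.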

#8 (about 2 minutes)

Running on-device models with the WebLLM library

The WebLLM library, powered by Apache TVM, simplifies the process of loading and running quantized LLMs directly within a web application.
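Recent WebLLM releases expose an OpenAI-style API. `CreateMLCEngine(modelId)` from `@mlc-ai/web-llm` returns an engine whose `engine.chat.completions.create({ messages })` resolves to a completion, with the text in `choices[0].message.content`. The following sketch codes against a minimal structural type for that call so it can be exercised without a GPU. The interfaces and the `ask` helper are hypothetical, the model ID is left as a placeholder, and details may differ between WebLLM versions.

```typescript
/** Minimal structural subset of the OpenAI-style chat API that WebLLM's engine exposes. */
interface ChatMessage { role: "system" | "user" | "assistant"; content: string; }

interface ChatEngine {
  chat: {
    completions: {
      create(req: { messages: ChatMessage[] }): Promise<{
        choices: { message: { content: string | null } }[];
      }>;
    };
  };
}

/** Sends one user prompt and returns the reply text (empty string if none). */
async function ask(engine: ChatEngine, userPrompt: string): Promise<string> {
  const reply = await engine.chat.completions.create({
    messages: [{ role: "user", content: userPrompt }],
  });
  return reply.choices[0]?.message.content ?? "";
}

// In the browser (sketch):
// import { CreateMLCEngine } from "@mlc-ai/web-llm";
// const engine = await CreateMLCEngine("<model-id>", {
//   initProgressCallback: (report) => console.log(report.text),
// });
// console.log(await ask(engine, "Summarize WebGPU in one sentence."));
```

Writing application code against a narrow interface like this also keeps the UI logic testable with a fake engine, since the real one needs WebGPU and a large weight download.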

#9 (about 2 minutes)

A live demo of on-device text generation

A markdown editor demonstrates fast, local text generation using the Gemma 2B model, with all processing happening in the browser without cloud requests.
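Streaming makes local generation feel responsive in an editor. WebLLM's OpenAI-style API accepts `stream: true` and yields chunks, each carrying new text in `choices[0].delta.content`. The following is a minimal sketch that accumulates those chunks and passes each partial result to the editor. `onUpdate` is a hypothetical stand-in for however the demo actually updates its text area.

```typescript
/** One streamed chunk in the OpenAI-style API (only the fields used here). */
interface StreamChunk { choices: { delta: { content?: string | null } }[]; }

/**
 * Appends streamed text to `prefix` and reports each partial result via
 * `onUpdate` (hypothetical editor hook). Resolves to the final text.
 */
async function streamInto(
  chunks: AsyncIterable<StreamChunk>,
  prefix: string,
  onUpdate: (text: string) => void,
): Promise<string> {
  let text = prefix;
  for await (const chunk of chunks) {
    const piece = chunk.choices[0]?.delta.content ?? "";
    if (piece) {
      text += piece;
      onUpdate(text); // e.g. write into the markdown editor
    }
  }
  return text;
}

// In the browser (sketch, given a WebLLM engine):
// const chunks = await engine.chat.completions.create({ messages, stream: true });
// await streamInto(chunks, editor.value, (t) => { editor.value = t; });
```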

#10 (about 1 minute)

Mitigating LLM hallucinations with RAG

Retrieval-Augmented Generation (RAG) improves LLM accuracy by providing relevant source documents alongside the user's prompt to ground the response in facts.
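The augmentation step itself is string assembly: the retrieved passages go into the prompt ahead of the question. The following is a minimal sketch. The numbered-source template is an assumption, not a prescribed format.

```typescript
/** Builds a RAG prompt by placing numbered source passages ahead of the question. */
function buildRagPrompt(question: string, passages: readonly string[]): string {
  const context = passages.map((p, i) => `[${i + 1}] ${p.trim()}`).join("\n\n");
  return `Context:\n${context}\n\nQuestion: ${question.trim()}`;
}
```

Numbering the passages gives the model, and the user, a way to point back to the passage that supports an answer.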

#11 (about 3 minutes)

Building an on-device RAG solution for PDFs

A demo application shows how to implement a fully client-side RAG system that processes a PDF and uses vector embeddings to answer questions.
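Two pieces do much of the work in a client-side RAG pipeline. The first splits the text extracted from the PDF into overlapping chunks. The second ranks chunk embeddings by cosine similarity to the question's embedding. The following is a minimal sketch of both. The embedding model that turns text into vectors is assumed and not shown, and the chunk size and overlap are arbitrary.

```typescript
/** Splits text into chunks of `size` characters, each overlapping the previous by `overlap`. */
function chunkText(text: string, size = 500, overlap = 50): string[] {
  if (overlap >= size) throw new Error("overlap must be smaller than size");
  const chunks: string[] = [];
  for (let start = 0; start < text.length; start += size - overlap) {
    chunks.push(text.slice(start, start + size));
    if (start + size >= text.length) break; // this chunk already reaches the end
  }
  return chunks;
}

/** Cosine similarity of two equal-length vectors (0 if either is all zeros). */
function cosine(a: readonly number[], b: readonly number[]): number {
  let dot = 0;
  let na = 0;
  let nb = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    na += a[i] * a[i];
    nb += b[i] * b[i];
  }
  return na === 0 || nb === 0 ? 0 : dot / Math.sqrt(na * nb);
}

/** Indices of the `k` chunk embeddings most similar to the query embedding. */
function topK(query: readonly number[], embeddings: readonly (readonly number[])[], k = 3): number[] {
  return embeddings
    .map((e, i) => ({ i, score: cosine(query, e) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((r) => r.i);
}
```

The overlap lowers the chance that a sentence answering the question is cut in half at a chunk boundary. The chunks at the returned indices are what get passed to the model as context.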

#12 (about 1 minute)

Forcing an LLM to admit when it doesn't know

By instructing the model to only use the provided context, a RAG system can reliably respond that it doesn't know the answer if it's not in the source document.
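In chat-style APIs such as WebLLM's, this instruction typically goes in a system message, together with a fixed fallback phrase that the application can detect. The following is a minimal sketch. The instruction wording and the fallback phrase are assumptions, not the demo's exact prompt.

```typescript
interface PromptMessage { role: "system" | "user"; content: string; }

/** Hypothetical fallback phrase the model is told to use when the context lacks the answer. */
const FALLBACK = "I don't know.";

/** Builds messages that restrict the model to the supplied context. */
function groundedMessages(context: string, question: string): PromptMessage[] {
  return [
    {
      role: "system",
      content:
        "Answer only using the provided context. " +
        `If the answer is not in the context, reply exactly: "${FALLBACK}"`,
    },
    { role: "user", content: `Context:\n${context}\n\nQuestion: ${question}` },
  ];
}

/** True if the reply is the fallback phrase (ignoring surrounding whitespace and case). */
function isUnknown(reply: string): boolean {
  return reply.trim().toLowerCase() === FALLBACK.toLowerCase();
}
```

A fixed phrase lets the UI detect an unanswerable question and respond differently, for example by telling the user the document does not cover it.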

#13 (about 2 minutes)

The future of on-device AI hardware and APIs

The performance of on-device AI is heavily hardware-dependent, but future improvements in chips (NPUs) and browser APIs like WebNN will broaden access.
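Support varies by browser and hardware, so apps usually feature-detect before choosing a path. WebGPU's entry point is `navigator.gpu.requestAdapter()`, which resolves to `null` when no suitable adapter is available. WebNN is exposed as `navigator.ml` where it is implemented. The following is a minimal sketch that takes the navigator as a loosely typed parameter so it can be tested outside a browser. That parameter shape is an assumption of the sketch.

```typescript
/** Only the navigator fields this check reads; typed loosely since WebNN typings are not universal. */
interface NavigatorLike {
  gpu?: { requestAdapter(): Promise<unknown> };
  ml?: unknown;
}

interface Accelerators { webgpu: boolean; webnn: boolean; }

/** Reports whether WebGPU (with a usable adapter) and WebNN are available. */
async function detectAccelerators(nav: NavigatorLike): Promise<Accelerators> {
  let webgpu = false;
  if (nav.gpu) {
    try {
      webgpu = (await nav.gpu.requestAdapter()) !== null;
    } catch {
      webgpu = false; // treat adapter errors as "not available"
    }
  }
  return { webgpu, webnn: nav.ml !== undefined };
}

// In the browser: detectAccelerators(navigator as unknown as NavigatorLike).then(console.log);
```

Checking the adapter, rather than only the presence of `navigator.gpu`, catches browsers that expose the API but have no usable GPU.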

#14 (about 2 minutes)

Key benefits of running AI in the browser

Browser-based AI offers significant advantages: privacy by default, zero installation, high interactivity, and scalability that grows with the user base, since each user supplies the compute.

Related jobs

Jobs that call for the skills explored in this talk.

Matching moments

02:20 MIN

The technology behind in-browser AI execution

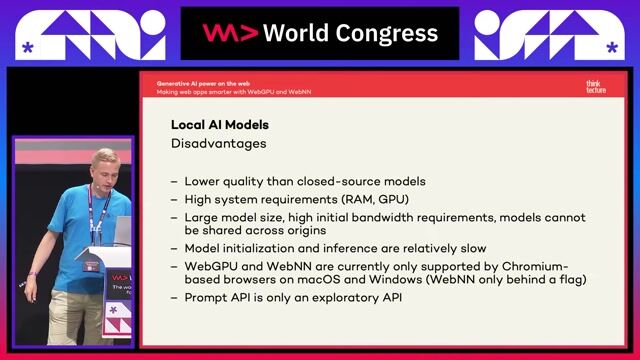

Generative AI power on the web: making web apps smarter with WebGPU and WebNN

01:41 MIN

Two primary approaches for browser-based AI

Prompt API & WebNN: The AI Revolution Right in Your Browser

03:24 MIN

Running on-device AI in the browser with Gemini Nano

Exploring Google Gemini and Generative AI

01:14 MIN

The future of on-device AI in web development

Generative AI power on the web: making web apps smarter with WebGPU and WebNN

09:27 MIN

Performing inference in the browser with ONNX Runtime Web

Making neural networks portable with ONNX

12:42 MIN

Running large language models locally with Web LLM

Generative AI power on the web: making web apps smarter with WebGPU and WebNN

04:31 MIN

Introducing generative AI in the browser with Chrome AI

aa

00:59 MIN

Building a custom voice AI with WebRTC and Google APIs

Raise your voice!

Featured Partners

Related Videos

30:36

30:36Prompt API & WebNN: The AI Revolution Right in Your Browser

Christian Liebel

32:27

32:27Generative AI power on the web: making web apps smarter with WebGPU and WebNN

Christian Liebel

33:51

33:51Exploring the Future of Web AI with Google

Thomas Steiner

30:13

30:13Privacy-first in-browser Generative AI web apps: offline-ready, future-proof, standards-based

Maxim Salnikov

36:41

36:41aa

aa

34:34

34:34Vision for Websites: Training Your Frontend to See

Daniel Madalitso Phiri

38:24

38:24Machine learning in the browser with TensorFlowjs

Håkan Silfvernagel

1:01:03

1:01:03WeAreDevelopers LIVE - Is AI replacing developers?, Stopping bots, AI on device & more

Chris Heilmann & Daniel Cranney & Sebastian Gingter

Related Articles

View all articles

From learning to earning

Jobs that call for the skills explored in this talk.

Nomitri

Berlin, Germany

DevOps

Gitlab

Docker

Ansible

Grafana

+6

score4more GmbH

Berlin, Germany

Remote

Intermediate

DevOps

TypeScript

Data analysis

Machine Learning

+2

Neural Concept

Lausanne, Switzerland

DevOps

Continuous Integration