Stanislas Girard

Chatbots are going to destroy infrastructures and your cloud bills

#1 (about 3 minutes)

Comparing web developers and data scientists before GenAI

Before generative AI, web developers mostly dealt with CPU-bound workloads that they scaled horizontally, while data scientists worked on GPU-bound tasks with access to large compute resources.

#2 (about 3 minutes)

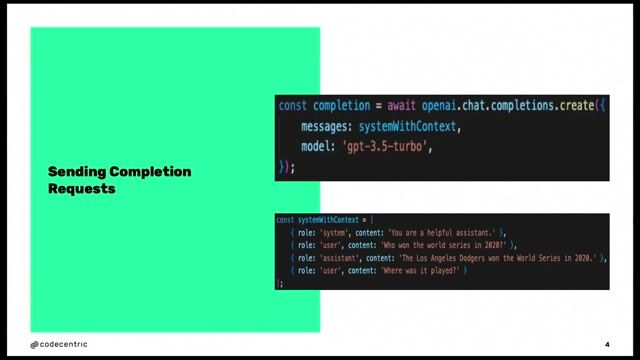

The new AI engineer role and the RAG pipeline

The emerging AI engineer role combines web development and data science skills and is often applied to building retrieval-augmented generation (RAG) pipelines, which ingest data and then answer queries over it.
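The two halves of a RAG pipeline (ingest, then query) can be sketched as below. This is a toy under stated assumptions: `TinyRAG`, `embed`, and the chunk size are hypothetical, a bag-of-words vector stands in for a real embedding model, an in-memory list stands in for a vector database, and the final LLM call is left out, so the sketch stops at building the prompt.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase the text and keep only alphanumeric word tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text: str) -> Counter:
    # Hypothetical stand-in for an embedding model: a bag-of-words count vector.
    return Counter(tokenize(text))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors.
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class TinyRAG:
    def __init__(self, chunk_size: int = 50) -> None:
        self.chunk_size = chunk_size  # words per chunk (an assumed value)
        self.index: list[tuple[str, Counter]] = []  # in-memory stand-in for a vector DB

    def ingest(self, document: str) -> int:
        # Ingestion: split the document into word chunks and store each chunk's vector.
        words = document.split()
        chunks = [" ".join(words[i:i + self.chunk_size])
                  for i in range(0, len(words), self.chunk_size)]
        self.index.extend((chunk, embed(chunk)) for chunk in chunks)
        return len(chunks)

    def retrieve(self, question: str, k: int = 2) -> list[str]:
        # Querying, step 1: rank stored chunks by similarity to the question.
        q = embed(question)
        ranked = sorted(self.index, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]

    def build_prompt(self, question: str, k: int = 2) -> str:
        # Querying, step 2: the prompt a real pipeline would send to an LLM.
        context = "\n".join(f"- {chunk}" for chunk in self.retrieve(question, k))
        return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    rag = TinyRAG()
    rag.ingest("GPU workers run embedding and inference jobs")
    rag.ingest("The web frontend serves chat requests on CPU")
    print(rag.build_prompt("Which jobs run on the GPU?", k=1))
```

Ingestion and querying only share the index, which is one reason they can later be split into separately scaled services.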

#3 (about 2 minutes)

Key architectural challenges in building GenAI apps

Generative AI applications face distinct architectural problems, including long response times, sequential bottlenecks, and the difficulty of mixing CPU-bound and GPU-bound processes.
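One common mitigation for the sequential bottleneck, when the slow steps are independent network calls, is to run them concurrently. The sketch below assumes a hypothetical `fake_llm_call` that simulates a 0.2 s model request with `asyncio.sleep`; it does not address GPU-bound work running locally, which concurrency on a single event loop does not speed up.

```python
import asyncio
import time


async def fake_llm_call(prompt: str, latency_s: float = 0.2) -> str:
    # Hypothetical stand-in for a slow, I/O-bound request to a hosted model.
    await asyncio.sleep(latency_s)
    return f"answer to: {prompt}"


async def run_sequential(prompts: list[str]) -> list[str]:
    # Each call waits for the previous one: total latency is roughly the sum.
    return [await fake_llm_call(p) for p in prompts]


async def run_concurrent(prompts: list[str]) -> list[str]:
    # Independent calls overlap their waiting: total latency is roughly the maximum.
    # asyncio.gather returns results in the same order as the inputs.
    return list(await asyncio.gather(*(fake_llm_call(p) for p in prompts)))


async def main() -> tuple[float, float]:
    prompts = ["summarize doc A", "summarize doc B", "summarize doc C"]
    t0 = time.perf_counter()
    await run_sequential(prompts)
    t1 = time.perf_counter()
    await run_concurrent(prompts)
    t2 = time.perf_counter()
    return t1 - t0, t2 - t1


if __name__ == "__main__":
    seq, conc = asyncio.run(main())
    print(f"sequential: {seq:.2f}s, concurrent: {conc:.2f}s")
```

This only helps when the calls do not depend on each other's output; a chain where each step needs the previous answer stays sequential.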

#4 (about 3 minutes)

How a simple chatbot evolves into a large monolith

Adding features like document ingestion and web scraping to a simple chatbot can rapidly increase its resource consumption and Docker image size, creating a complex monolith.

#5 (about 4 minutes)

Refactoring a monolithic AI app into a service architecture

To manage complexity and cost, a monolithic AI application should be refactored so that user-facing logic and heavy background tasks run as distinct, independently scalable services.
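The split between user-facing logic and heavy background work can be sketched with a job queue. Here an in-process `queue.Queue` and a thread stand in for what would be separate services connected by a message broker in production; the function names and the word-count "work" are hypothetical placeholders for real parsing and embedding.

```python
import queue
import threading
import uuid

# In-process stand-ins for a message broker and a shared results store.
jobs: queue.Queue = queue.Queue()
results: dict[str, str] = {}
results_lock = threading.Lock()


def api_submit_document(text: str) -> str:
    # User-facing service: enqueue the heavy work and return a job id immediately.
    job_id = str(uuid.uuid4())
    jobs.put((job_id, text))
    return job_id


def api_get_status(job_id: str) -> str:
    # User-facing service: cheap status lookup, no heavy computation on this path.
    with results_lock:
        return results.get(job_id, "pending")


def ingestion_worker() -> None:
    # Background service: consumes jobs and can be scaled independently of the API.
    while True:
        item = jobs.get()
        if item is None:  # sentinel that tells this worker to stop
            jobs.task_done()
            break
        job_id, text = item
        word_count = len(text.split())  # placeholder for parsing, chunking, embedding
        with results_lock:
            results[job_id] = f"done: {word_count} words indexed"
        jobs.task_done()


if __name__ == "__main__":
    worker = threading.Thread(target=ingestion_worker, daemon=True)
    worker.start()
    job = api_submit_document("a long PDF turned into text")
    jobs.join()  # wait until the worker has processed everything queued so far
    print(api_get_status(job))  # done: 6 words indexed
    jobs.put(None)
    worker.join()
```

Because the API side only enqueues, it can stay small and CPU-light, while the worker side could run on GPU nodes with its own replica count.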

#6 (about 3 minutes)

Choosing the right architecture for your application's workload

A monolithic architecture is suitable for low or continuous workloads, while a service-based approach is necessary for applications with high or spiky traffic to manage costs and scale effectively.
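The cost side of this trade-off can be made concrete with back-of-the-envelope arithmetic. The sketch compares a deployment sized for peak and left running against a service that scales its replica count with hourly demand. Every number is a made-up input, and the model ignores cold-start latency, scale-up lag, and the fixed overhead of running extra services, which can shift the balance in practice.

```python
import math


def always_on_cost(hourly_requests: list[int], capacity_per_replica: int,
                   price_per_replica_hour: float) -> float:
    # A single deployment sized for the peak hour and left running all day.
    replicas = math.ceil(max(hourly_requests) / capacity_per_replica)
    return replicas * price_per_replica_hour * len(hourly_requests)


def autoscaled_cost(hourly_requests: list[int], capacity_per_replica: int,
                    price_per_replica_hour: float, min_replicas: int = 0) -> float:
    # A separately deployed service that runs only the replicas each hour needs.
    total = 0.0
    for requests in hourly_requests:
        replicas = max(min_replicas, math.ceil(requests / capacity_per_replica))
        total += replicas * price_per_replica_hour
    return total


if __name__ == "__main__":
    spiky = [0] * 22 + [1000, 1000]  # quiet day with a two-hour burst (made-up numbers)
    steady = [100] * 24              # constant load (made-up numbers)
    for name, load in (("spiky", spiky), ("steady", steady)):
        print(name, always_on_cost(load, 100, 2.0), autoscaled_cost(load, 100, 2.0))
```

With these inputs the spiky profile costs 480.0 versus 40.0 units, while the steady profile costs 48.0 either way, which is the pattern the chapter describes: scaling per service pays off mainly when load is bursty.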

#7 (about 2 minutes)

Overlooked challenges of running AI applications in production

Beyond core architecture, running AI in production involves complex challenges like managing GPUs on Kubernetes, model versioning, data compliance, and testing non-deterministic outputs.
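For non-deterministic outputs, one common approach (not necessarily the one used in the talk) is to sample the same prompt several times, check properties of each answer instead of comparing exact strings, and require a minimum pass rate. `fake_model`, its 10% failure chance, and the 0.7 threshold are assumptions for illustration.

```python
import json
import random
from typing import Callable


def fake_model(prompt: str, seed: int) -> str:
    # Hypothetical non-deterministic model: usually valid JSON, occasionally malformed.
    rng = random.Random(seed)
    if rng.random() < 0.1:
        return "Sure! Here is the answer: {invalid"
    return json.dumps({"answer": "Paris", "confidence": round(rng.uniform(0.7, 1.0), 2)})


def is_valid(output: str) -> bool:
    # Property checks instead of an exact match: parseable JSON, right answer, sane confidence.
    try:
        data = json.loads(output)
    except json.JSONDecodeError:
        return False
    if not isinstance(data, dict):
        return False
    confidence = data.get("confidence")
    return (data.get("answer") == "Paris"
            and isinstance(confidence, (int, float))
            and 0.0 <= confidence <= 1.0)


def pass_rate(model: Callable[[str, int], str], prompt: str, samples: int = 50) -> float:
    # Fraction of sampled outputs that satisfy every property.
    passed = sum(is_valid(model(prompt, seed)) for seed in range(samples))
    return passed / samples


if __name__ == "__main__":
    rate = pass_rate(fake_model, "What is the capital of France?")
    assert rate >= 0.7, f"pass rate too low: {rate:.2f}"
    print(f"pass rate: {rate:.2f}")
```

Fixed seeds keep this particular test repeatable; against a real model the structure stays the same, but the threshold becomes a tolerance to tune.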

#8 (about 2 minutes)

Using creative evaluations and starting with small models

A creative evaluation using a game like Street Fighter reveals that smaller, faster LLMs can outperform larger ones for many use cases, making them a better starting point.
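The Street Fighter evaluation itself is not reproduced here; the sketch only shows the "start small" policy it motivates: evaluate candidates from smallest to largest on your own test cases and keep the first one that clears a quality bar. The model names, sizes, exact-match scoring, and the 0.9 bar are all hypothetical.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Candidate:
    name: str
    params_billions: float          # model size, used to order candidates
    generate: Callable[[str], str]  # hypothetical wrapper around a model endpoint


def evaluate(c: Candidate, cases: list[tuple[str, str]]) -> tuple[float, float]:
    # Returns (accuracy, mean latency in seconds) over (prompt, expected) pairs.
    correct = 0
    start = time.perf_counter()
    for prompt, expected in cases:
        if c.generate(prompt).strip().lower() == expected.lower():
            correct += 1
    elapsed = time.perf_counter() - start
    return correct / len(cases), elapsed / len(cases)


def pick_smallest_good_enough(candidates: list[Candidate],
                              cases: list[tuple[str, str]],
                              min_accuracy: float = 0.9) -> Optional[tuple[str, float, float]]:
    # Try models from smallest to largest and stop at the first that meets the bar.
    for c in sorted(candidates, key=lambda cand: cand.params_billions):
        accuracy, latency = evaluate(c, cases)
        if accuracy >= min_accuracy:
            return c.name, accuracy, latency
    return None  # no candidate is good enough


if __name__ == "__main__":
    cases = [("2+2", "4"), ("capital of France", "Paris")]
    answers = {"2+2": "4", "capital of France": "Paris"}
    small = Candidate("small-model", 1.0, lambda p: {"2+2": "4"}.get(p, "?"))
    medium = Candidate("medium-model", 8.0, lambda p: answers[p])
    large = Candidate("large-model", 70.0, lambda p: answers[p])
    print(pick_smallest_good_enough([large, small, medium], cases))
```

Ordering by size means the larger, slower, more expensive models are only paid for when the smaller ones demonstrably fall short.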

Matching moments

01:37 MIN

Introduction to large-scale AI infrastructure challenges

Your Next AI Needs 10,000 GPUs. Now What?

03:11 MIN

Using generative AI to enhance developer productivity

Throwing off the burdens of scale in engineering

06:46 MIN

Managing the rapid pace of AI development and its impact

From Monolith Tinkering to Modern Software Development

03:34 MIN

How generative AI is changing software development

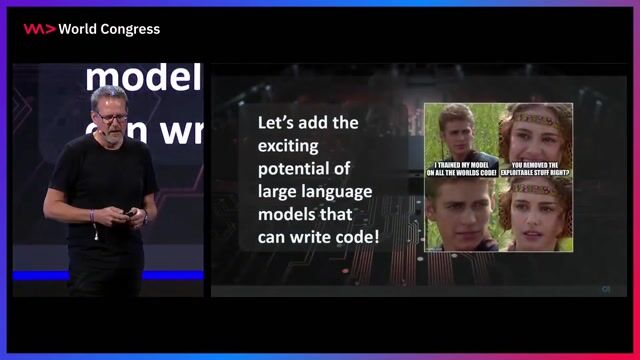

The transformative impact of GenAI for software development and its implications for cybersecurity

03:38 MIN

The impact of ChatGPT and the rise of chat interfaces

Innovating Developer Tools with AI: Insights from GitHub Next

02:59 MIN

Positioning generative AI as the next major technology shift

The Data Phoenix: The future of the Internet and the Open Web

04:34 MIN

Analyzing the risks and architecture of current AI models

Opening Keynote by Sir Tim Berners-Lee

02:24 MIN

Navigating the overwhelming wave of generative AI adoption

Developer Experience, Platform Engineering and AI powered Apps
