Snowflake Data Architect (all genders)

Job description

- Customer consulting: You advise our customers on the architecture, implementation, and use of intelligent data solutions built on the Snowflake AI Data Cloud (such as Data Mesh, Data Sharing, and Marketplace).

- Strategic architecture and design: As an architect, you design future-proof cloud data architectures for Snowflake and ensure that the Cloud Data Platform is scalable, secure, and performant.

- End-to-end platform build: You take responsibility for building data platforms holistically and ensure that CI/CD, testing concepts, and infrastructure as code are considered consistently.

- Select and integrate technologies: You advise on the technological roadmap and strategy, support the selection and integration of third-party tools (dbt, Talend, Matillion, etc.), and implement Snowflake features.

- Data management: You help our customers establish structured and reliable data processes, including compliance with data protection regulations, the definition of data governance policies, and the implementation of data access and security concepts.

- Close collaboration: Working closely with IT and business units, you actively shape projects as an architect, lead developer, or product owner.

- Actively participate in presales: You are responsible for technical solution concepts in proposals, accompany proposal presentations, and conduct workshops.

Requirements

- Experience in Data Platform projects, especially with Snowflake.

- Expertise in cloud data platforms like AWS, GCP, or Azure.

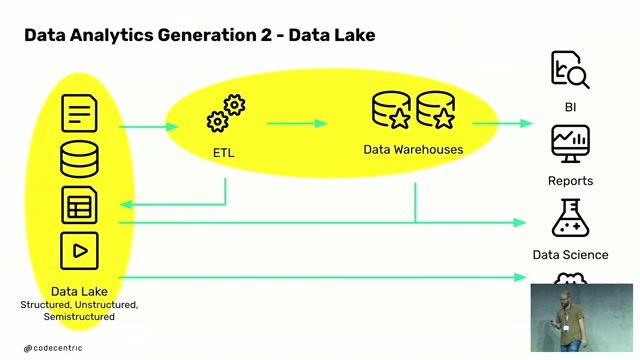

- Strong knowledge of ETL/ELT methodologies and programming skills.

- Data platform architecture: You have experience in data platform projects, ideally with Snowflake, and you master data platform concepts (DWH, Data Lake, Data Lakehouse, and Data Mesh) as well as modeling with Data Vault 2.0 or classic patterns such as snowflake and star schemas.

- Cloud expertise: You bring experience with cloud data platforms and a cloud hyperscaler such as AWS, GCP, or Azure, basic knowledge of cloud infrastructure (VPC, IAM, etc.), and familiarity with infrastructure as code (IaC), e.g., Terraform.

- ETL/ELT methodology: You develop robust batch and streaming data pipelines with high data quality, are familiar with integration tools such as dbt, Fivetran, Matillion, Talend, or Informatica, and have programming skills in SQL, Python, Java, or Scala, or with Spark.

- Project and consulting experience: You have experience as a project manager, product owner, architect, or in a comparable role.

- Languages: Very good German and good English skills; French is an advantage.

Benefits & conditions

Our promise: You will feel at home with us! Collegial, community-minded, and on equal footing: we value open exchange, team spirit, and respectful interaction. Diversity and different perspectives are valued just as much as you are as a person. That and much more defines our very special sense of "we." There is even a word for it: adessi.

- Learn and grow with us: With more than 400 training courses and our digital learning platform, we support your continuous development.

- Experience true team spirit: Joint events (e.g., a ski weekend and the annual eduCamp training trip), welcome days, and company runs strengthen our cohesion and make you part of the team from day one.

- Rewarded engagement: Your commitment pays off, with bonuses for referrals, presentations, and thesis supervision, plus attractive discounts through our corporate benefits portal.

Our culture and collaboration are characterized by mutual appreciation, recognition, and support. That connects us, even when working from home. Part-time work is possible by arrangement (80-100%). We have repeatedly been voted one of the best employers in Switzerland! Our extensive training offer and a transparent career level model for everyone ensure that your development with us never stands still. Growing together and creating opportunities are part of our DNA.