Alan Mazankiewicz

Fully Orchestrating Databricks from Airflow

#1about 5 minutes

Exploring the core features of the Databricks workspace

A walkthrough of the Databricks UI shows how to create Spark clusters, run code in notebooks, and define scheduled jobs with multi-task dependencies.

#2about 6 minutes

Understanding the fundamentals of Apache Airflow orchestration

Airflow provides powerful workflow orchestration with features like dynamic task generation, complex trigger rules, and a detailed UI for monitoring DAGs.

#3about 5 minutes

Integrating Databricks and Airflow with built-in operators

The DatabricksRunNowOperator and DatabricksSubmitRunOperator allow Airflow to trigger predefined or dynamically defined jobs in Databricks via its REST API.

#4about 3 minutes

Creating a custom operator for full Databricks API control

To overcome the limitations of built-in operators, you can create a generic custom operator by subclassing BaseOperator and using the DatabricksHook to make arbitrary API calls.

#5about 3 minutes

Implementing a custom operator to interact with DBFS

A practical example demonstrates how to use the custom generic operator to make a 'put' request to the DBFS API, including the use of Jinja templates for dynamic paths.

#6about 2 minutes

Developing advanced operators for complex cluster management

For complex scenarios, custom operators can be built to create an all-purpose cluster, wait for it to be ready, submit multiple jobs, and then terminate it.

#7about 5 minutes

Answering questions on deployment, performance, and tooling

The discussion covers running Airflow in production environments like Kubernetes, optimizing Spark performance on Databricks, and comparing Airflow to Azure Data Factory.

#8about 10 minutes

Discussing preferred data stacks and career advice

The speaker shares insights on their preferred data stack for different use cases, offers advice for beginners learning Python, and describes a typical workday as a data engineer.

Related jobs

Jobs that call for the skills explored in this talk.

Matching moments

03:42 MIN

Exploring the dbt ecosystem and key integrations

Enjoying SQL data pipelines with dbt

02:49 MIN

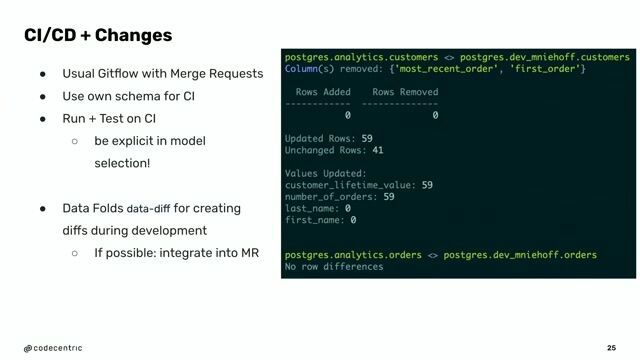

Q&A: MLOps tools for building CI/CD pipelines

Data Science in Retail

02:56 MIN

An introduction to the Apache Spark analytics engine

PySpark - Combining Machine Learning & Big Data

02:24 MIN

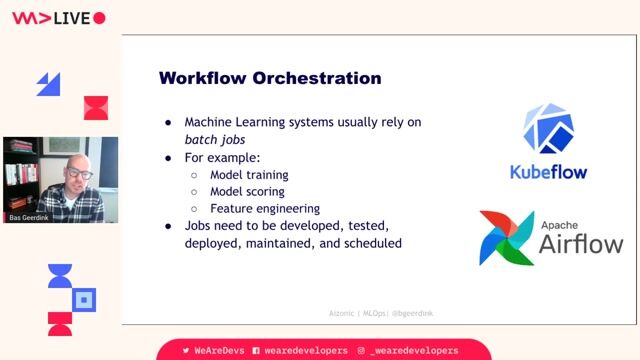

Orchestrating MLOps workflows for reliability

The state of MLOps - machine learning in production at enterprise scale

01:29 MIN

Q&A: Raw data formats and comparing dbt to Spark

Enjoying SQL data pipelines with dbt

05:17 MIN

Architecting an end-to-end event-driven workflow on Azure

Implementing an Event Sourcing strategy on Azure

01:29 MIN

Overview of the data and machine learning tech stack

Empowering Retail Through Applied Machine Learning

03:59 MIN

Modern data architectures and the reality of team size

Modern Data Architectures need Software Engineering

Featured Partners

Related Videos

43:26

43:26PySpark - Combining Machine Learning & Big Data

Ayon Roy

39:04

39:04Python-Based Data Streaming Pipelines Within Minutes

Bobur Umurzokov

26:53

26:53Enjoying SQL data pipelines with dbt

Matthias Niehoff

46:43

46:43Convert batch code into streaming with Python

Bobur Umurzokov

45:58

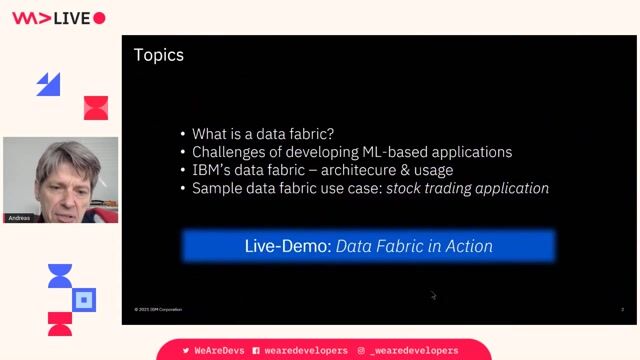

45:58Data Fabric in Action - How to enhance a Stock Trading App with ML and Data Virtualization

Andreas Christian

43:57

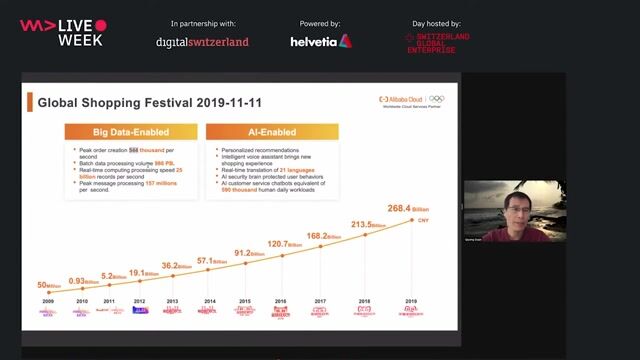

43:57Alibaba Big Data and Machine Learning Technology

Dr. Qiyang Duan

34:00

34:00Implementing continuous delivery in a data processing pipeline

Álvaro Martín Lozano

48:45

48:45Databases on Kubernetes

Denis Souza Rosa

Related Articles

View all articles

From learning to earning

Jobs that call for the skills explored in this talk.

RM IT Professional Resources AG

Zürich, Switzerland

€187-208K

Senior

PySpark