Sergio Perez & Harshita Seth

Adding knowledge to open-source LLMs

#1about 4 minutes

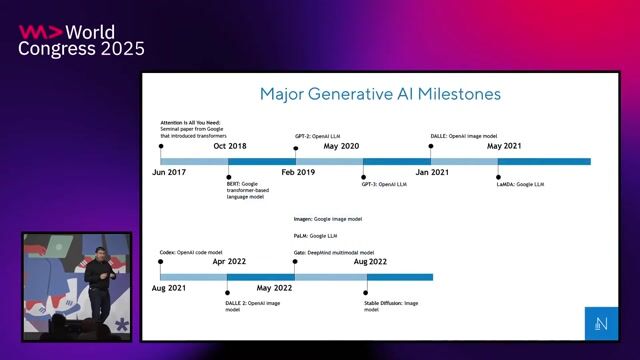

Understanding the LLM training pipeline and knowledge gaps

LLMs are trained through pre-training and alignment, but require new knowledge to stay current, adapt to specific domains, and acquire new skills.

#2about 5 minutes

Adding domain knowledge with continued pre-training

Continued pre-training adapts a foundation model to a specific domain by training it further on specialized, unlabeled data using self-supervised learning.

#3about 6 minutes

Developing skills and reasoning with supervised fine-tuning

Supervised fine-tuning uses instruction-based datasets to teach models specific tasks, chat capabilities, and complex reasoning through techniques like chain of thought.

#4about 8 minutes

Aligning models with human preferences using reinforcement learning

Preference alignment refines model behavior using reinforcement learning, evolving from complex RLHF with reward models to simpler methods like DPO.

#5about 2 minutes

Using frameworks like NeMo RL to simplify model alignment

Frameworks like the open-source NeMo RL abstract away the complexity of implementing advanced alignment algorithms like reinforcement learning.

Related jobs

Jobs that call for the skills explored in this talk.

Matching moments

02:26 MIN

Understanding the core capabilities of large language models

Data Privacy in LLMs: Challenges and Best Practices

01:12 MIN

Introducing InstructLab for accessible LLM fine-tuning

Unlocking the Power of AI: Accessible Language Model Tuning for All

08:05 MIN

How large language models are trained

Inside the Mind of an LLM

05:18 MIN

Addressing the core challenges of large language models

Accelerating GenAI Development: Harnessing Astra DB Vector Store and Langflow for LLM-Powered Apps

02:55 MIN

Addressing the key challenges of large language models

Large Language Models ❤️ Knowledge Graphs

03:42 MIN

Using large language models as a learning tool

Google Gemini: Open Source and Deep Thinking Models - Sam Witteveen

02:21 MIN

The training process of large language models

Google Gemini: Open Source and Deep Thinking Models - Sam Witteveen

03:17 MIN

Using RAG to enrich LLMs with proprietary data

RAG like a hero with Docling

Featured Partners

Related Videos

27:11

27:11Inside the Mind of an LLM

Emanuele Fabbiani

29:20

29:20LLMOps-driven fine-tuning, evaluation, and inference with NVIDIA NIM & NeMo Microservices

Anshul Jindal

31:50

31:50Unlocking the Power of AI: Accessible Language Model Tuning for All

Cedric Clyburn & Legare Kerrison

30:04

30:04Self-Hosted LLMs: From Zero to Inference

Roberto Carratalá & Cedric Clyburn

28:38

28:38Exploring LLMs across clouds

Tomislav Tipurić

27:35

27:35Give Your LLMs a Left Brain

Stephen Chin

26:30

26:30Three years of putting LLMs into Software - Lessons learned

Simon A.T. Jiménez

31:25

31:25Unveiling the Magic: Scaling Large Language Models to Serve Millions

Patrick Koss

Related Articles

View all articles.png?w=240&auto=compress,format)

.gif?w=240&auto=compress,format)

.png?w=240&auto=compress,format)

From learning to earning

Jobs that call for the skills explored in this talk.

Warmwind

€240K

Senior

PyTorch

Tensorflow

Machine Learning

Warmwind

€120K

PyTorch

Tensorflow

Machine Learning