Soroosh Khodami

Why and when should we consider Stream Processing frameworks in our solutions

#1 about 2 minutes

Differentiating stream processing from event processing

Stream processing focuses on transforming continuous data streams, whereas event processing is about making decisions and triggering actions based on individual messages.

#2 about 2 minutes

Handling out-of-order data with event time

Stream processing frameworks can reorder messages based on when the event actually occurred (event time) rather than when it was received (processing time).
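
The reordering idea can be sketched in plain Python. This is only an illustration under stated assumptions, not how any particular framework implements it: the `Event` type, the `reorder_by_event_time` function, and the `allowed_lateness` parameter are hypothetical names, and the "watermark" here is simply the largest event time seen so far minus the allowed lateness.

```python
import heapq
from typing import Iterable, Iterator, NamedTuple


class Event(NamedTuple):
    event_time: int  # when the event occurred (hypothetical unit: seconds)
    payload: str


def reorder_by_event_time(events: Iterable[Event], allowed_lateness: int) -> Iterator[Event]:
    """Yield events in event-time order, buffering them for up to allowed_lateness."""
    buffer = []      # min-heap ordered by event_time
    max_seen = None  # largest event time observed so far
    for event in events:  # iteration order models arrival (processing-time) order
        heapq.heappush(buffer, event)
        max_seen = event.event_time if max_seen is None else max(max_seen, event.event_time)
        watermark = max_seen - allowed_lateness
        # Everything at or before the watermark is assumed complete and can be released.
        while buffer and buffer[0].event_time <= watermark:
            yield heapq.heappop(buffer)
    # End of input: flush whatever is still buffered, in event-time order.
    while buffer:
        yield heapq.heappop(buffer)


# Events arrive out of order: "c" before "b", "e" before "d".
arrivals = [Event(1, "a"), Event(3, "c"), Event(2, "b"), Event(5, "e"), Event(4, "d")]
ordered = list(reorder_by_event_time(arrivals, allowed_lateness=2))
print([e.payload for e in ordered])
```

An event that arrives more than `allowed_lateness` behind the newest event would still be emitted late in this sketch; real frameworks offer explicit policies for such late data.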

#3 about 2 minutes

Understanding message delivery guarantees

Frameworks provide mechanisms for exactly-once processing, which prevents duplicate message processing and is critical for financial systems.
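
To see why duplicates matter for financial data, here is a minimal sketch of one common building block: an idempotent consumer that remembers message IDs, so a redelivered message has no second effect. The `IdempotentLedger` class and the message IDs are hypothetical, and this is not the mechanism of any specific framework, which typically combine checkpointing with transactional or idempotent sinks.

```python
class IdempotentLedger:
    """Applies each message at most once, even if it is delivered several times."""

    def __init__(self) -> None:
        self.balance = 0
        self._seen_ids = set()  # IDs of messages that were already applied

    def apply(self, message_id: str, amount: int) -> bool:
        """Return True if the message was applied, False if it was a duplicate."""
        if message_id in self._seen_ids:
            return False  # duplicate delivery: ignore it
        self._seen_ids.add(message_id)
        self.balance += amount
        return True


ledger = IdempotentLedger()
# "m1" is delivered twice, as can happen with at-least-once delivery after a retry.
deliveries = [("m1", 100), ("m2", -30), ("m1", 100)]
applied = [ledger.apply(message_id, amount) for message_id, amount in deliveries]
print(applied, ledger.balance)
```

Without the ID check, the retried deposit would be counted twice and the balance would be wrong.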

#4 about 3 minutes

Building data pipelines with sources and operators

Data pipelines are constructed by chaining operators that read from a source, apply transformations like filtering or joining, and write to a sink.
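
The source, operator, and sink structure can be illustrated with plain Python generators. This is a sketch of the shape of such a pipeline, not the API of any framework; the function names (`source`, `filter_op`, `map_op`, `sink`) and the sensor readings are made up for the example.

```python
from typing import Callable, Iterable, Iterator, List


def source(records: Iterable[dict]) -> Iterator[dict]:
    """Source: emits records one at a time (here from an in-memory list)."""
    yield from records


def filter_op(predicate: Callable[[dict], bool], stream: Iterable[dict]) -> Iterator[dict]:
    """Operator: passes on only the records that satisfy the predicate."""
    return (record for record in stream if predicate(record))


def map_op(fn: Callable[[dict], str], stream: Iterable[dict]) -> Iterator[str]:
    """Operator: transforms each record."""
    return (fn(record) for record in stream)


def sink(stream: Iterable[str], output: List[str]) -> None:
    """Sink: writes results somewhere (here, appends to a list)."""
    for record in stream:
        output.append(record)


readings = [
    {"sensor": "s1", "celsius": 21.0},
    {"sensor": "s2", "celsius": 35.5},
    {"sensor": "s3", "celsius": 40.0},
]

alerts: List[str] = []
# Chain: source -> filter -> map -> sink. Nothing runs until the sink pulls records.
pipeline = map_op(
    lambda r: f"{r['sensor']} is hot: {r['celsius']}",
    filter_op(lambda r: r["celsius"] > 30.0, source(readings)),
)
sink(pipeline, alerts)
print(alerts)
```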

#5 about 5 minutes

Using windowing to process continuous data streams

Windowing groups unbounded data into finite chunks for processing, with types like tumbling, sliding, and session windows serving different analytical needs.
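
The three window types can be compared on the same set of timestamps. The sketch below works on a finite list for clarity, whereas a framework assigns windows incrementally as data arrives. The function names and parameters (`size`, `slide`, `gap`) are illustrative assumptions, and sliding windows are assumed to start at time 0 or later.

```python
from collections import defaultdict
from typing import Dict, List


def tumbling_windows(timestamps: List[int], size: int) -> Dict[int, List[int]]:
    """Fixed, non-overlapping windows: each event belongs to exactly one window."""
    windows = defaultdict(list)
    for ts in timestamps:
        windows[(ts // size) * size].append(ts)  # key is the window start
    return dict(windows)


def sliding_windows(timestamps: List[int], size: int, slide: int) -> Dict[int, List[int]]:
    """Overlapping windows of length `size` that start every `slide` units."""
    windows = defaultdict(list)
    for ts in timestamps:
        start = (ts // slide) * slide  # latest window start that contains ts
        while start > ts - size and start >= 0:
            windows[start].append(ts)
            start -= slide
    return dict(windows)


def session_windows(timestamps: List[int], gap: int) -> List[List[int]]:
    """Windows that close after `gap` units of inactivity."""
    sessions: List[List[int]] = []
    for ts in sorted(timestamps):
        if sessions and ts - sessions[-1][-1] <= gap:
            sessions[-1].append(ts)  # still within the current session
        else:
            sessions.append([ts])    # inactivity gap exceeded: start a new session
    return sessions


timestamps = [1, 4, 6, 12, 13, 25]
tumbling = tumbling_windows(timestamps, size=10)
sliding = sliding_windows(timestamps, size=10, slide=5)
sessions = session_windows(timestamps, gap=5)
print(tumbling, sliding, sessions, sep="\n")
```

Note how the event at time 6 lands in one tumbling window but in two sliding windows, while session boundaries depend only on the gaps between events.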

#6 about 1 minute

Joining data from multiple real-time streams

You can combine data from multiple streams using familiar concepts like inner joins and cross joins to create enriched data outputs.
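
As a rough illustration of an inner join over two streams, the sketch below buffers records from each side by key and emits a combined record as soon as both sides have a match. The stream names, record fields, and the `streaming_inner_join` function are hypothetical. The buffers here grow without bound; real frameworks usually limit them with windows or expiring state.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple


def streaming_inner_join(
    arrivals: Iterable[Tuple[str, dict]],
    left_name: str,
    right_name: str,
    key: str,
) -> Iterator[dict]:
    """Join two interleaved streams on `key`, emitting a row for each matching pair."""
    buffers: Dict[str, defaultdict] = {
        left_name: defaultdict(list),
        right_name: defaultdict(list),
    }
    for side, record in arrivals:
        other = right_name if side == left_name else left_name
        buffers[side][record[key]].append(record)  # remember for future matches
        for match in buffers[other].get(record[key], []):
            left, right = (record, match) if side == left_name else (match, record)
            yield {**left, **right}


# A single interleaved sequence models records arriving from two streams.
arrivals = [
    ("orders", {"order_id": 1, "item": "book"}),
    ("payments", {"order_id": 2, "amount": 15}),
    ("payments", {"order_id": 1, "amount": 20}),
    ("orders", {"order_id": 2, "item": "pen"}),
    ("orders", {"order_id": 3, "item": "lamp"}),  # never paid: dropped by the inner join
]
enriched = list(streaming_inner_join(arrivals, "orders", "payments", key="order_id"))
print(enriched)
```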

#7 about 2 minutes

Implementing complex logic with stateful processing

Stateful processing allows operators to store and retrieve data in memory, enabling complex logic like tracking user behavior or detecting fraud patterns over time.
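
A simple fraud-style rule shows what keeping state per key looks like. The sketch below flags a card once it makes more than a given number of transactions within a time window. The `VelocityDetector` class, its thresholds, and the card IDs are assumptions for illustration, and timestamps are assumed to arrive in non-decreasing order per card.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class VelocityDetector:
    """Flags a key when it exceeds max_events within window_seconds."""

    def __init__(self, max_events: int, window_seconds: int) -> None:
        self.max_events = max_events
        self.window_seconds = window_seconds
        # Per-key state: timestamps of recent transactions for each card.
        self._state: Dict[str, Deque[int]] = defaultdict(deque)

    def process(self, card_id: str, timestamp: int) -> bool:
        """Update the state for card_id and return True if the card looks suspicious."""
        recent = self._state[card_id]
        recent.append(timestamp)
        # Evict timestamps that have fallen out of the time window.
        while recent and timestamp - recent[0] > self.window_seconds:
            recent.popleft()
        return len(recent) > self.max_events


detector = VelocityDetector(max_events=2, window_seconds=60)
transactions = [("A", 0), ("A", 10), ("B", 15), ("A", 20), ("A", 100)]
flags = [detector.process(card_id, ts) for card_id, ts in transactions]
print(flags)
```

Only the third transaction of card A within 60 seconds is flagged. By time 100 the older entries have been evicted, so the state stays small.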

#8 about 1 minute

Overview of popular stream processing frameworks

Key frameworks for stream processing include Apache Flink, Apache Beam, Spark Streaming, and Kafka Streams, with cloud platforms offering managed services.

#9 about 4 minutes

Comparing Spring Boot vs Apache Beam performance

A practical benchmark shows that while Apache Beam offers higher throughput, a standard Spring Boot and Redis setup can be sufficient and more cost-effective for many use cases.

#10 about 3 minutes

Weighing the benefits and significant drawbacks

While powerful, stream processing frameworks have a steep learning curve for development teams and are difficult to maintain and debug.

#11 about 1 minute

Real-world use cases for stream processing

Stream processing is heavily used in industries like gaming for anti-cheat systems, IoT for real-time traffic data, and finance for fraud detection.

#12 about 2 minutes

Learning resources and communicating with stakeholders

Before adopting these complex frameworks, it is crucial to manage stakeholder expectations about the high cost and difficulty of implementing and changing data pipelines.

Matching moments

04:18 MIN

Why modern applications adopt event streaming

Event Messaging and Streaming with Apache Pulsar

04:23 MIN

A traditional approach to streaming with Kafka and Debezium

Python-Based Data Streaming Pipelines Within Minutes

04:18 MIN

Using streaming data to power real-time agent applications

Unlocking Value from Data: The Key to Smarter Business Decisions-

07:00 MIN

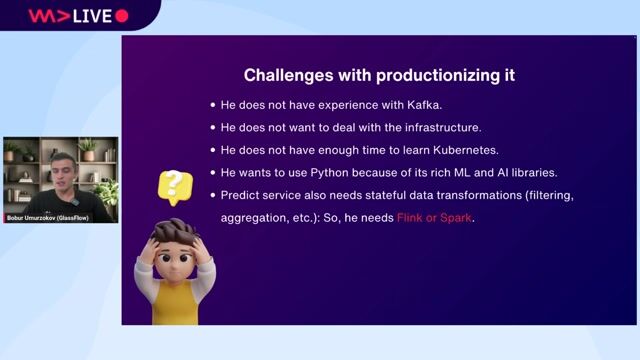

Exploring the operational complexity of Kafka and Flink

Python-Based Data Streaming Pipelines Within Minutes

03:15 MIN

Understanding the challenges of adopting real-time data streaming

Python-Based Data Streaming Pipelines Within Minutes

01:31 MIN

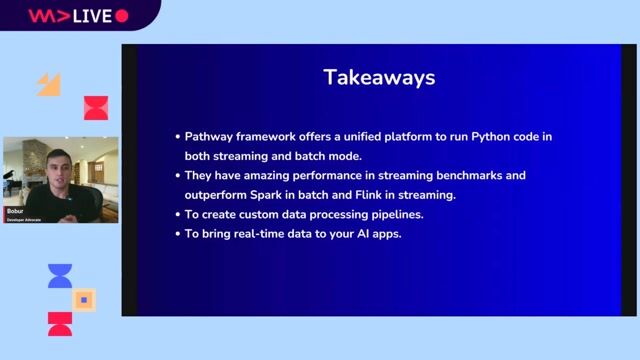

Key takeaways for modern data processing

Convert batch code into streaming with Python

02:57 MIN

Understanding the purpose and core use cases of Kafka

Let's Get Started With Apache Kafka® for Python Developers

03:41 MIN

Decoupling microservices with event streams

From event streaming to event sourcing 101

Featured Partners

Related Videos

46:43

46:43Convert batch code into streaming with Python

Bobur Umurzokov

45:48

45:48Kafka Streams Microservices

Denis Washington & Olli Salonen

39:04

39:04Python-Based Data Streaming Pipelines Within Minutes

Bobur Umurzokov

57:19

57:19Event Messaging and Streaming with Apache Pulsar

Mary Grygleski

27:33

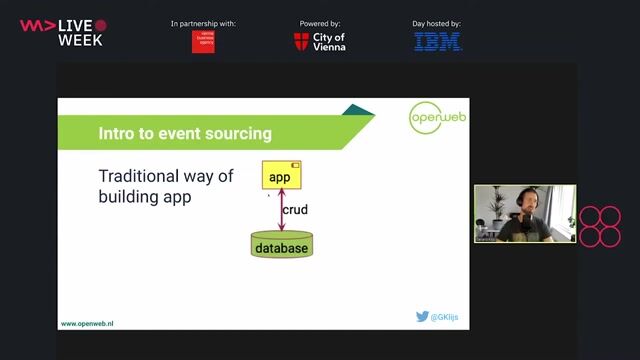

27:33From event streaming to event sourcing 101

Gerard Klijs

30:36

30:36In-Memory Computing - The Big Picture

Markus Kett

52:15

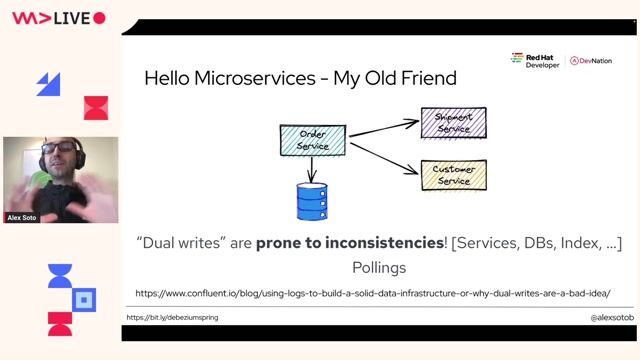

52:15Practical Change Data Streaming Use Cases With Debezium And Quarkus

Alex Soto

57:38

57:38Are you done yet? Mastering long-running processes in modern architectures

Bernd Ruecker
